Can IIT Guwahati’s Deep Learning Sensor Transform Exhaled Air into Voice Commands?

Key Takeaways

- A new sensor detects tiny water waves created by exhaled air and converts them into voice commands.

- Facilitates contactless communication for voice-disabled individuals.

- Utilizes deep learning models for signal interpretation.

- The lab-scale prototype costs Rs. 3,000.

- Potential applications include exercise tracking and underwater communication.

New Delhi, Aug 4 (NationPress) Researchers at the Indian Institute of Technology (IIT) Guwahati have developed an underwater vibration sensor that enables automated, contactless voice recognition.

Developed in partnership with researchers from Ohio State University, the sensor offers a new way to communicate for people with voice disabilities who struggle to use conventional voice-based systems.

The study focused on the air expelled through the mouth during speech, an essential physiological function.

Even in individuals who cannot produce vocal sounds, attempting to speak still pushes air out of the lungs. When this airflow strikes a water surface, it creates tiny waves.

The underwater vibration sensor detects these waves and interprets them as speech signals without relying on audible sound, opening a new avenue for voice recognition, the research team reported in the journal Advanced Functional Materials.

“This design is unique as it recognizes voice by monitoring the water wave formed at the air/water interface due to exhaled air from the mouth. This method holds potential for effective communication for individuals with partially or completely damaged vocal cords,” stated Prof. Uttam Manna from the Department of Chemistry at IIT Guwahati.

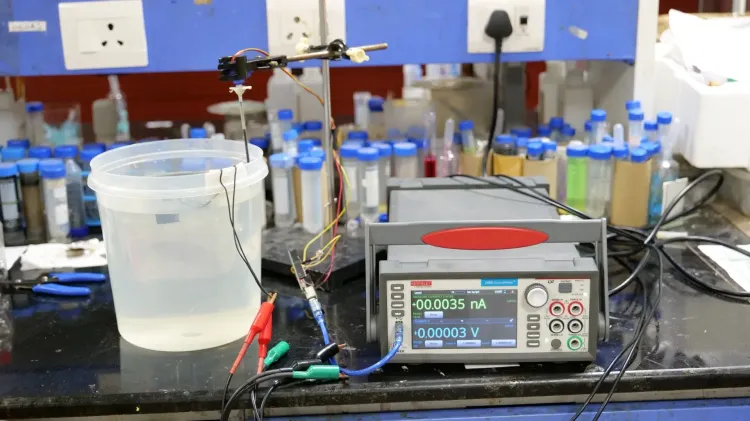

The sensor is made from a porous sponge that is both electrically conductive and chemically reactive.

Positioned just beneath the air-water interface, it captures the minuscule disturbances created by exhaled air and translates them into measurable electrical signals.

The research team used convolutional neural networks (CNNs), a type of deep learning model, to recognize these subtle signal patterns accurately.
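For readers curious how a CNN can turn such an electrical signal into a command, here is a minimal, illustrative sketch in Python. It is not the team's model: the two command labels, the signal values and every kernel and layer weight are hypothetical and hand-picked rather than learned, whereas a real system would train many filters on recorded sensor data. It shows only the basic steps: sliding small filters (kernels) along the signal, discarding negative responses (ReLU), keeping each filter's strongest response (max pooling), and scoring each label with a final layer.

```python
import math

# Minimal illustrative 1D CNN forward pass (NOT the IIT Guwahati model).
# All labels, signals and weights below are hypothetical and hand-picked.

def conv1d(signal, kernel, bias=0.0):
    """Slide the kernel along the signal ('valid' cross-correlation)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]

def relu(values):
    """Keep positive responses and zero out negative ones."""
    return [max(0.0, v) for v in values]

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(signal, kernels, dense_weights, dense_bias, labels):
    # Convolution + ReLU + global max pooling: one feature per kernel.
    features = [max(relu(conv1d(signal, k))) for k in kernels]
    # Dense layer: one score per label, computed from the pooled features.
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(dense_weights, dense_bias)]
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs

# Hypothetical setup: kernel 0 responds to a brief spike,
# kernel 1 responds to a sustained disturbance.
LABELS = ["short_puff", "long_breath"]
KERNELS = [[-1.0, 2.0, -1.0], [1.0, 1.0, 1.0]]
DENSE_WEIGHTS = [[1.0, -0.5], [-1.0, 1.0]]
DENSE_BIAS = [0.0, 0.0]

if __name__ == "__main__":
    puff = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]    # hypothetical brief disturbance
    breath = [0.0, 1.0, 1.0, 1.0, 1.0, 0.0]  # hypothetical longer exhalation
    for name, sig in [("puff", puff), ("breath", breath)]:
        label, _ = classify(sig, KERNELS, DENSE_WEIGHTS, DENSE_BIAS, LABELS)
        print(name, "->", label)  # puff -> short_puff, breath -> long_breath
```

Because one filter reacts to a sharp spike and the other to a sustained level, a short puff and a longer exhalation produce different feature values and end up with different labels. A trained network works on the same principle but learns its filters from data instead of having them set by hand.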

This setup lets users interact with devices from a distance without producing any sound.

“Currently, the prototype costs Rs. 3,000 on a lab scale,” the team noted. The researchers added that they are seeking industry partners to move the technology from the laboratory to practical applications, which could bring costs down.

Key features of the sensor include contactless communication for individuals with voice disabilities, AI-driven interpretation using CNNs, and hands-free management of smart devices.

Beyond voice recognition, the sensor can also be used for exercise tracking and motion detection. Because it remained stable after prolonged underwater exposure, it also shows promise for underwater sensing and communication.