Can Large Language Models Predict Plant Gene Functions Accurately?

Key Takeaways

- LLMs can accurately predict gene functions in plants.

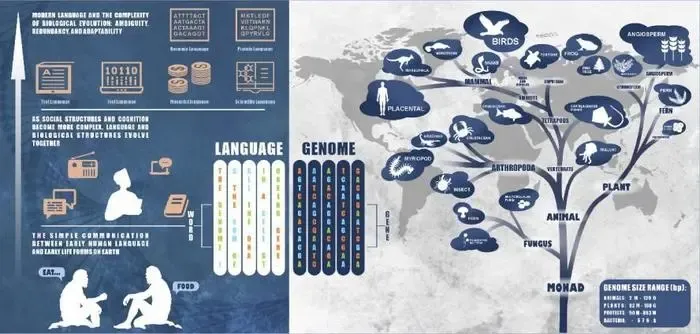

- They leverage similarities between genomic sequences and natural language.

- Enhancements in crop improvement and biodiversity conservation are possible.

- Traditional models are often limited by data availability.

- Research highlights the adaptability of LLMs across species.

New Delhi, June 1 (NationPress) Large language models (LLMs), when trained on extensive plant genomic data, can accurately predict gene functions and regulatory elements, researchers revealed on Sunday.

By exploiting the structural similarities between genomic sequences and natural language, these AI-driven models can interpret complex genetic data and offer new insights into plant biology.

According to the study, published in the journal Tropical Plants, this development could help speed up crop improvement, strengthen biodiversity conservation, and reinforce food security amid global challenges.

Historically, plant genomics has struggled with large, complex datasets, often held back by the limitations of traditional machine learning models and a lack of annotated data.

While LLMs have transformed fields such as natural language processing, their use in plant genomics is still in its infancy. The main challenge has been adapting these models to the distinct "language" of plant genomes, which differs significantly from human linguistic structures.

This study examined the capabilities of LLMs in plant genomics.

By drawing parallels between the structures of natural language and genomic sequences, the research illustrates how LLMs can be trained to understand and predict gene functions, regulatory elements, and expression patterns in plants.

The study discusses various LLM architectures, including encoder-only models like DNABERT, decoder-only models such as DNAGPT, and encoder-decoder models like ENBED.
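One way to see how these three families differ is in which positions each token is allowed to attend to. The Python sketch below is a simplified illustration of standard Transformer attention masking, not code from the study or from DNABERT, DNAGPT, or ENBED; the function names are hypothetical.

```python
from typing import Dict, List

# mask[i][j] is True if position i may attend to position j
Mask = List[List[bool]]


def bidirectional_mask(n: int) -> Mask:
    """Encoder-only (BERT-style): every token can see every other token."""
    return [[True] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """Decoder-only (GPT-style): token i can see only positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def encoder_decoder_masks(n_src: int, n_tgt: int) -> Dict[str, Mask]:
    """Encoder-decoder: bidirectional encoder self-attention, causal
    decoder self-attention, and cross-attention from every decoder
    position to every encoder position."""
    return {
        "encoder_self": bidirectional_mask(n_src),
        "decoder_self": causal_mask(n_tgt),
        "cross": [[True] * n_src for _ in range(n_tgt)],
    }


if __name__ == "__main__":
    # Print the causal mask for a 4-token sequence: '1' = may attend.
    for row in causal_mask(4):
        print("".join("1" if allowed else "." for allowed in row))
```

The bidirectional mask suits tasks that read a whole region at once (such as classifying a promoter), while the causal mask suits generating sequence one token at a time.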

The research team employed a strategy involving pre-training LLMs on extensive datasets of plant genomic sequences, followed by fine-tuning with specific annotated data to increase accuracy.
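Pre-training of this kind is self-supervised: the model learns from unlabelled sequence by predicting hidden parts of it. For encoder-only models such as DNABERT, a common objective is masked-token prediction (decoder-only models instead predict the next token). The article does not state the exact objective or masking rate used, so the 15% rate, the mask token ID, the `-100` "ignore" label and the function name below are assumptions. This is a simplified sketch; BERT's original recipe also sometimes keeps or randomizes selected tokens instead of masking them, which is omitted here.

```python
import random
from typing import List, Tuple

# Label value meaning "compute no loss here" (a common convention, assumed)
IGNORE = -100


def mask_tokens(token_ids: List[int], mask_id: int, rate: float = 0.15,
                seed: int = 0) -> Tuple[List[int], List[int]]:
    """Build one masked-language-modelling training example (hypothetical helper).

    Returns (inputs, labels): `inputs` has a fraction `rate` of positions
    replaced by `mask_id`; `labels` holds the original token at those
    positions and IGNORE elsewhere, so the model is scored only on
    recovering the hidden tokens.
    """
    if not token_ids:
        return [], []
    rng = random.Random(seed)
    n_mask = max(1, int(len(token_ids) * rate))
    positions = set(rng.sample(range(len(token_ids)), n_mask))
    inputs = [mask_id if i in positions else t for i, t in enumerate(token_ids)]
    labels = [t if i in positions else IGNORE for i, t in enumerate(token_ids)]
    return inputs, labels
```

Fine-tuning then reuses the pre-trained weights but swaps this objective for a supervised one, such as predicting an annotated label for each sequence.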

By treating DNA sequences like linguistic sentences, the models could uncover patterns and connections within the genetic code.
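Treating DNA as text first requires splitting it into "words". DNABERT, for example, tokenizes sequences into overlapping k-mers. The sketch below shows that idea with stride-1 k-mers and a simple vocabulary; the function names are hypothetical, and real models add special tokens and fixed vocabularies that are not shown here.

```python
from typing import Dict, List


def kmer_tokenize(seq: str, k: int = 6) -> List[str]:
    """Split a DNA sequence into overlapping k-mers (stride 1).
    Returns an empty list if the sequence is shorter than k."""
    seq = seq.upper()
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def build_vocab(tokens: List[str]) -> Dict[str, int]:
    """Assign an integer ID to each distinct k-mer, in order of first appearance."""
    vocab: Dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


if __name__ == "__main__":
    tokens = kmer_tokenize("ATGCGTAC", k=3)
    print(tokens)  # ['ATG', 'TGC', 'GCG', 'CGT', 'GTA', 'TAC']
    vocab = build_vocab(tokens)
    print([vocab[t] for t in tokens])  # [0, 1, 2, 3, 4, 5]
```

Overlapping k-mers keep local context at every position, at the cost of producing roughly one token per nucleotide.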

These models have demonstrated potential in tasks such as promoter prediction, enhancer identification, and gene expression analysis. Notably, plant-specific models like AgroNT and FloraBERT have been created, showing enhanced performance in annotating plant genomes and forecasting tissue-specific gene expression.

However, the study also notes that most existing LLMs have been trained on animal or microbial data, which frequently lack comprehensive genomic annotations. Even so, the work points to the adaptability and strength of LLMs across various plant species.

In conclusion, this research emphasizes the vast potential of integrating artificial intelligence, particularly large language models, into plant genomics studies. The research was conducted by Meiling Zou, Haiwei Chai, and Zhiqiang Xia’s team from Hainan University.